Note: This post is still a work in progress while I clean up wording and grammatical errors, but I wanted to get it out sooner rather than later.

Introduction:

In my last post, I laid out a list of core tenets for what the social media platform of tomorrow could look like. Here they are again, for reference:

Core Tenets:

- You get one account – that’s it.

- There is no “one” algorithm

- There are no community moderators, only sherpas

- It’s all attribute tags

- AI acts as a helper, NEVER as a creator

- A centralized platform

So, let’s start breaking these down, one by one, starting with…

Tenet #1: You get one account – that’s it.

In the world of new social media platforms, a critical step would be requiring Identity Verification (IDV) from the get-go and using it to ensure that each person can have only one account on the platform.

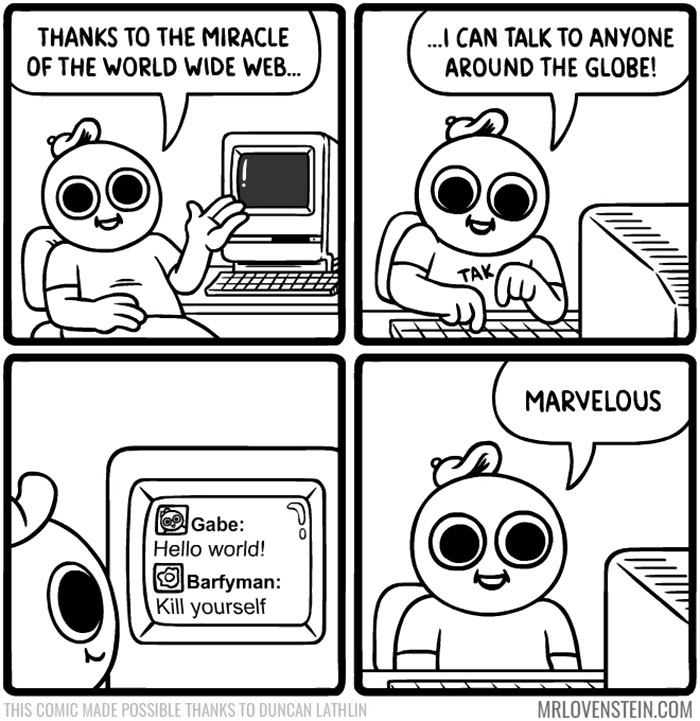

Let me first make myself very clear: I’m not happy about having ID Verification as a requirement. For the longest time, privacy on the internet has been an important concept to many netizens (including myself), and requiring people to send very personal identifying information to a website is antithetical to that.

That being said, there’s been growing momentum behind requiring sites to do ID Verification. In the past few years, for example, states like Texas and countries like France have enacted laws that compel adult sites to verify the age of their users via an ID Verification service (no more checkboxes to say “I agree I am over 18”). There may also be growing support for ID Verification more widely: a recent Pew Research Center study found that a large majority of U.S. adults (71%) and even a majority of teens (56%) are in favor of social media sites requiring age verification to use their platforms.

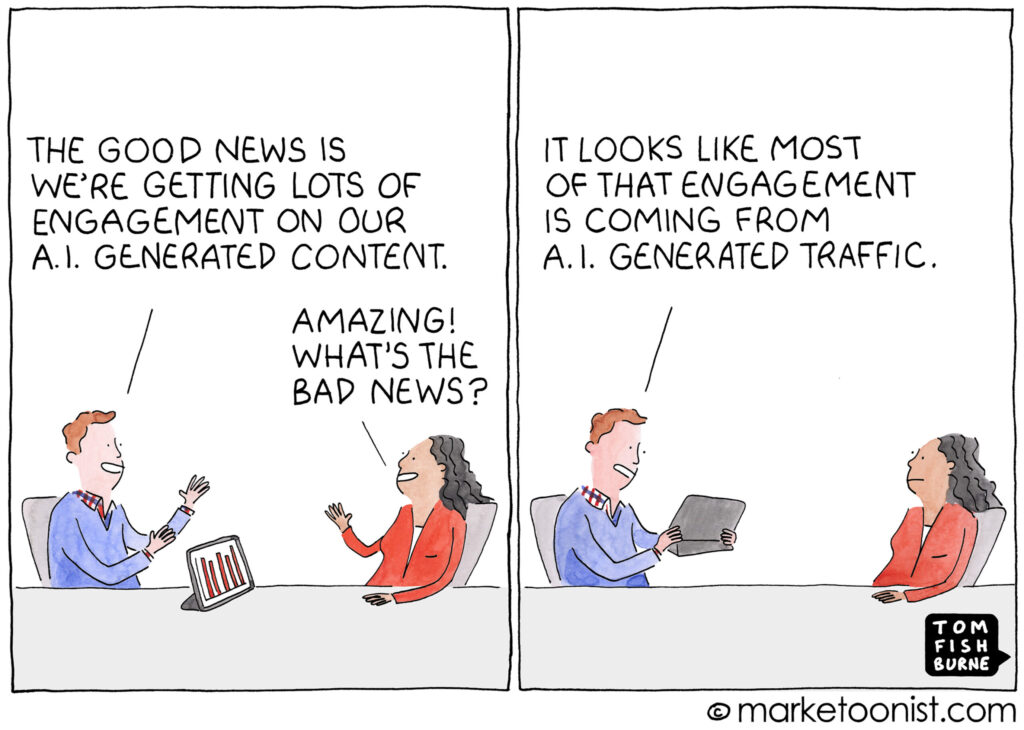

Failing to implement IDV at the start could also come with huge consequences, as there will be no shutting the barn door after the horse has bolted. Looking at current platforms, Facebook removed 1.1 billion fake accounts in Q3 2024, TikTok removed 6 billion suspected fake accounts in Q4 2024, and on Reddit, the problem has gotten so pervasive that in some subreddits, AI-generated content may account for up to 45% of posts. The problem isn’t that these platforms aren’t trying; it’s that cleaning up a platform after it has already been flooded is an impossible task, and it’s only going to get harder as time goes on.[1]

So what does this “one human, one account” rule, enforced by IDV, actually achieve? In a nutshell, it’s about three very practical outcomes: establishing trust, verifying details about who you are, and making consequences meaningful:

1. Establishing trust

The core issue is that the value of any online community is built on a foundation of trust. That trust is shattered when you can no longer be sure if you are talking to a human. This doesn’t mean the platform has to be human only; in fact, well-designed bots can be incredibly useful. The problem is deception. A verified system solves this by creating clear categories. An account would either be tied to a single, verified human, or it would be a clearly labeled “Bot” account. By knowing the nature of the account you’re interacting with, you avoid the specific, trust-destroying betrayal of realizing that someone you were debating with was an AI.[2] You can trust that the humans are human, and you can trust that the bots are bots.[3]

2. Verifying details about who you are

As I mentioned at the beginning, states like Texas and countries like France already require adult sites to verify that their users are over 18. With growing support among adults and teens for doing the same on social media, it seems like a no-brainer that ID Verification would help verify that you are old enough to view adult content.

But age gating isn’t just about preventing those under 18 from viewing NSFW content; it can also help provide a space for people in the same stage of life. When asking about what to do about a problem with your spouse, do you really want someone under 18 commenting about how this is grounds for divorce, when they’ve never even been married? Similarly, do teens want 30-somethings in their community, potentially trying to pretend to be teenagers? Knowing someone’s age can help with these cases as well.
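As a rough illustration of the age-gating idea above, here is a minimal sketch in Python. It assumes the platform already holds a date of birth taken from the verified ID; the `Community` class, its `min_age`/`max_age` fields, and the example community names are hypothetical and only there to make the idea concrete.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


def age_on(date_of_birth: date, today: date) -> int:
    """Return the person's age in whole years on the given day."""
    had_birthday = (today.month, today.day) >= (date_of_birth.month, date_of_birth.day)
    return today.year - date_of_birth.year - (0 if had_birthday else 1)


@dataclass(frozen=True)
class Community:
    """Hypothetical community with an optional allowed age range."""
    name: str
    min_age: Optional[int] = None  # None means no lower bound
    max_age: Optional[int] = None  # None means no upper bound


def may_participate(community: Community, date_of_birth: date, today: date) -> bool:
    """Check a verified date of birth against the community's age range."""
    age = age_on(date_of_birth, today)
    if community.min_age is not None and age < community.min_age:
        return False
    if community.max_age is not None and age > community.max_age:
        return False
    return True
```

With something like this, a marriage-advice community could set `min_age=18`, while a teen community could set both `min_age` and `max_age`, so the same check covers both of the cases described above.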

There’s much more that can be gleaned from an ID, such as your address. That can help solve the problem I described in the post on the problems with social media users, where someone from Texas can comment in a community for a state they have never been to, and their “weight” is the same as everyone else’s. If you knew a user’s location, you could give the opinions of people from outside the community’s area much less weight.[4]

3. Making consequences meaningful

A core reason our online spaces have become so toxic, as I touched on in the post on the problems with social media users, is the lack of meaningful consequences. On today’s platforms, the moderation team is playing a game of whack-a-mole; they can ban a user for egregious behavior, only for that same person to reappear minutes later with a new account. Tying every human account to a single, verified identity fundamentally changes the dynamic. It gives the platform the power to make its rules stick. If a user breaks the community’s trust in a major way, a ban is no longer a temporary inconvenience — it is an effective and permanent removal from the platform. This ensures the community can actually police itself and maintain a safe environment.

That being said, it is crucial to understand that verification should not mean using your real name. The goal is to ensure each account is tied to a single, real person — not to force everyone to post with their government ID. Users should absolutely retain the ability to use aliases and maintain a separation between their online self and their real-world identity.
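To make the “one human, one account” rule and the permanent ban a bit more concrete, here is a minimal in-memory sketch in Python. Everything in it is an assumption for illustration: the `AccountRegistry` class, the idea that the IDV provider hands back a stable ID document number, and the choice to store only a keyed hash of that number so the public alias stays separate from the real-world identity. A real system would need persistent storage and much more care around privacy.

```python
import hashlib
import hmac


class AccountRegistry:
    """Toy registry: each verified identity maps to at most one account."""

    def __init__(self, secret_key: bytes) -> None:
        self._secret_key = secret_key
        self._accounts: dict[str, str] = {}  # identity fingerprint -> public alias
        self._banned: set[str] = set()       # fingerprints of banned identities

    def _fingerprint(self, id_document_number: str) -> str:
        # Keyed hash, so the raw ID number itself is never stored.
        return hmac.new(
            self._secret_key, id_document_number.encode("utf-8"), hashlib.sha256
        ).hexdigest()

    def register(self, id_document_number: str, alias: str) -> bool:
        """Create an account under an alias; refuse duplicates and banned identities."""
        fingerprint = self._fingerprint(id_document_number)
        if fingerprint in self._banned or fingerprint in self._accounts:
            return False
        self._accounts[fingerprint] = alias
        return True

    def ban(self, id_document_number: str) -> None:
        """Remove the account and block the identity from registering again."""
        fingerprint = self._fingerprint(id_document_number)
        self._accounts.pop(fingerprint, None)
        self._banned.add(fingerprint)
```

The point of the sketch is that the ban attaches to the identity fingerprint rather than to the alias, so signing up again under a new name does not get around it.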

Alternatives to the one account policy?

While I believe a mandatory, universal verification system is the most robust solution, it’s worth mentioning an alternative path. If a mandatory system proves to be too great a hurdle, one could design the platform around a “tiered trust” system where verified users have their posts, votes, and comments weighted significantly more (e.g., 10x or 20x the weight of an unverified user’s). While I see this as a viable fallback, the core IDV system remains the ideal. The alternative simply provides a powerful incentive that pushes the platform toward the same goal: ensuring its discourse is driven by real, accountable people.
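Here is a minimal sketch of how the “tiered trust” weighting could work for votes, in Python. The 10x multiplier comes from the example above; the `Vote` class and `tiered_score` function are hypothetical names, and a real ranking system would involve far more than a weighted sum.

```python
from dataclasses import dataclass

# Assumed multiplier, taken from the "10x" example in the text.
VERIFIED_MULTIPLIER = 10.0
UNVERIFIED_MULTIPLIER = 1.0


@dataclass(frozen=True)
class Vote:
    direction: int           # +1 for an upvote, -1 for a downvote
    voter_is_verified: bool  # True if the voter passed ID Verification


def tiered_score(votes: list[Vote]) -> float:
    """Sum the votes, counting verified voters more heavily."""
    return sum(
        vote.direction
        * (VERIFIED_MULTIPLIER if vote.voter_is_verified else UNVERIFIED_MULTIPLIER)
        for vote in votes
    )
```

Under these assumed weights, a single verified upvote outweighs five unverified downvotes, which is the incentive the tiered approach is meant to create.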

This is the first piece of the puzzle. Next, we’ll talk about why there shouldn’t be just “one” algorithm. Stay tuned.

Footnotes:

[1] As an example, with the platform I helped build that got over 5 million users, we tried everything: CAPTCHA, Cloudflare Bot protection, verifying user emails, verifying a phone number, verifying a credit card, blocking disposable emails, blocking IPs/JA4 signatures, you name it. Regardless of what we tried, bad actors continued to be the main source of our headaches, because they could get past all of these barriers. (And we weren’t even in the top 50 social media platforms on the web!)

[2] See https://www.engadget.com/ai/researchers-secretly-experimented-on-reddit-users-with-ai-generated-comments-194328026.html, where researchers experimented on Reddit users to see if they could use artificial intelligence to change users’ views.

[3] Although nothing would stop someone from using their ID-verified account to run a bot, the consequences of being caught should keep this from becoming a real problem: a site-wide ban would be tied to their real identity, making it difficult for them to ever do this again.

[4] There are other ways to get someone’s location besides their official government ID, of course, the most common being their IP address or the browser’s Geolocation API. But these are easy to fake, most commonly with VPNs and residential proxies, which let you appear to be in a different location than you actually are.